How La Poste uses Pathway microservices to deliver high-quality ETAs

Sergey Kulik

La Poste is France’s national postal service provider, spanning mail, express shipping, banking, and even mobile offerings. Over the centuries, it has become a key player in both logistics and financial services. The network moves parcels through 17 industrial platforms, orchestrates 400-plus truck movements each day, and streams 16 million geolocation points a year.

Every second, hundreds of IoT devices emit data points about the status of operations. Turning that data into reliable ETAs is key to improving efficiency, reducing delays, and avoiding congestion and incidents.

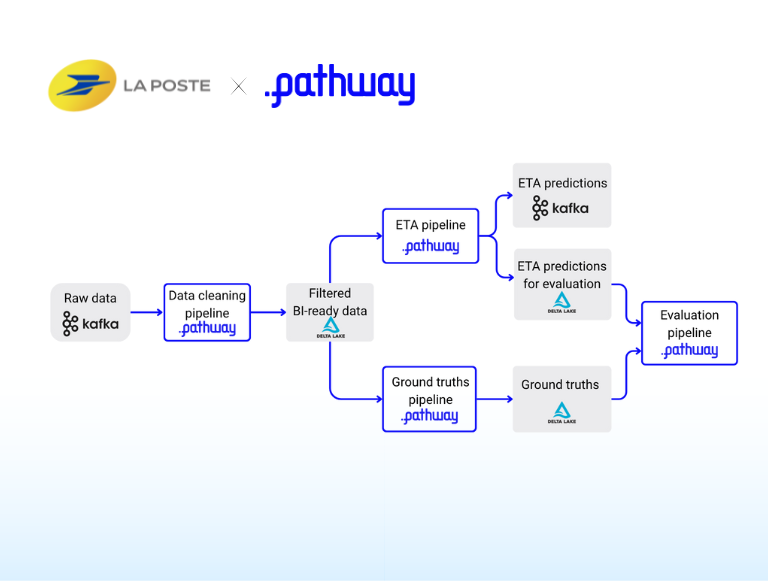

In early 2024, the La Poste Technological Innovation team adopted Pathway’s Python-native streaming engine and stitched Pathway-to-Pathway microservices together over Delta Lake: data preparation, prediction, ground-truth extraction, and evaluation. This created a LiveAI™ layer, effectively a digital twin of the fleet, that turns raw GPS points into sub-second ETAs and real-time anomaly alerts in one continuous flow.

The migration has already cut the IoT platform’s total cost of ownership by 50% and is projected to reduce fleet CAPEX by 16%. “It’s a paradigm shift … the ROI is enormous,” says Jean-Paul Fabre, Head of Technological Innovation.

The sections ahead retrace that journey from the initial monolithic prototype to today’s modular pipeline, detailing the trade-offs and efficient scaling techniques.

The Problem: Estimating Arrival Times

Imagine a stream of IoT data generated by hundreds of transport units. This data is published on a Kafka topic and is ingested by Pathway. For simplicity, each data point can be assumed to include a transport unit ID, latitude and longitude, speed, and a timestamp.

Now consider a second stream: ETA requests. Each request contains the latitude and longitude of the target location, the ID of the assigned transport unit, and a timestamp indicating when the event occurred. This Kafka topic is partitioned so that all requests associated with a given transport unit ID arrive in the order of the intended arrivals.

The goal of the pipeline is to produce estimated arrival times for the requested goods. The following section breaks down the components of this pipeline in more detail.

First Solution: The Monolithic Pipeline

While solving the problem using a single pipeline may seem straightforward, it would involve at least the following components:

- Data cleanup and normalization. For instance, a transport unit may enter a tunnel and report invalid GPS data. A common anomaly is the coordinate (0, 0), which corresponds to a location in the Atlantic Ocean approximately 600 kilometers off the coast of West Africa. Another frequently encountered issue involves duplicated events with identical timestamps that arrive in Kafka later. It is essential to filter out such incorrect or corrupted events to ensure the dataset remains clean and reliable.

- Prediction job. Once the dataset has been cleaned, predictions can be generated from the incoming data. Estimating ETAs is a complex task, requiring consideration of multiple factors such as road networks and conditions, time of day, and historical trends. Since this tutorial focuses on the microservice architecture, only simplified approaches are discussed here.

These two tasks - data preparation and prediction - can be implemented together within a single pipeline. In such a setup, there is no immediate necessity to split them into separate services, which leads to what we refer to as a monolithic pipeline.

Once the core pipeline is in place, it's also important to include mechanisms for continuously evaluating its performance, monitoring the quality of predictions, and quickly detecting any degradation.

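Maintaining all of this inside a single pipeline is challenging: cleanup, prediction, evaluation, and monitoring become tightly coupled, so a change to one of them affects the whole.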

Using Microservices for a More Production-Grade Pipeline

The previous section covered how to build a monolithic pipeline that provides basic ETAs. Now, it's time to focus on adding the necessary components for quality evaluation, model improvement, and alerting in case of a problem.

To enable this, a source of ground truths is required. These can be derived from the processed data stream: when an event indicates a transport unit has reached its designated target location, it can be interpreted as a completed delivery. The corresponding timestamp then serves as the actual arrival time - or ground truth - for evaluation. This brings us to a reason for introducing a split in the previously described pipeline: the data preparation process and the prediction logic are now decoupled. The cleaned and normalized data is reused, not only for prediction but also for ground truth detection. Separating these responsibilities makes it easier to manage, scale, and evolve each part independently.

The resulting ground truths support multiple use cases, each of which can be handled by a separate Pathway instance. One key application is monitoring the quality of predictions. Several other processes also play a vital role in maintaining the overall pipeline. Although these are not discussed in detail here, they are briefly covered later in the "Going further" section.

This architecture comprises four pipelines: data preparation, prediction, ground truth calculation, and evaluation. The primary advantage of this microservice-style design lies not only in its simplicity but also in its flexibility—for example, experimenting with a new prediction model requires updating only one component of the system.

Key components

This section describes the four main components of the pipeline. Since including every detail would be impractical, only the most relevant and illustrative pieces of code are provided. The aim is to show how a Pathway pipeline can be decomposed into several smaller, more manageable pipelines.

Data Acquisition and Filtering Pipeline

Assume a Kafka topic contains a stream of data points emitted by various transport units. The goal of the first pipeline component is to read this data, clean it, and prepare it for the prediction stage.

First, a schema must be defined. As outlined in the previous section, each event contains the following fields: latitude, longitude, transport unit ID, speed, and a timestamp (expressed as a UNIX timestamp). The schema may be structured as follows:

    import pathway as pw

    class InputEntrySchema(pw.Schema):
        transport_unit_id: str
        latitude: float
        longitude: float
        speed: float
        timestamp: int  # UNIX timestamp

Since the data originates from a Kafka source, it can be read using pw.io.kafka.simple_read for simplicity. The more robust pw.io.kafka.read would typically be used in a production environment, allowing detailed configuration of rdkafka settings and other parameters. However, for demonstration purposes, the simpler version is sufficient:

    import os

    input_signals = pw.io.kafka.simple_read(
        os.environ["KAFKA_IOT_SERVER"],
        os.environ["KAFKA_IOT_SIGNALS_TOPIC"],
        schema=InputEntrySchema,
        format="json",
    )

Once the data is ingested, it must be cleaned. This involves filtering out erroneous records, such as those with invalid GPS coordinates. One common issue is the presence of events with coordinates (0, 0) - a location known as Null Island, which clearly indicates corrupted or incomplete data. A basic filtering step might look like this:

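    # Column expressions are combined with `|` and `&`; Python's `or`/`and`
    # cannot be applied to them.
    filtered_signals = input_signals.filter(
        (pw.this.latitude != 0) | (pw.this.longitude != 0)
    )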

Additional filters can be applied in a similar chain to handle other anomalies, such as duplicate events or inconsistent timestamps.
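
To make these extra rules concrete, here is a minimal plain-Python sketch of the checks such a chain might apply. It is not Pathway code and not the La Poste implementation: the `Signal` tuple and the `clean_signals` helper are hypothetical names, and treating any event that is not strictly newer than the last accepted one as a duplicate or late arrival is an assumption about the desired policy.

```python
from typing import Iterable, Iterator, NamedTuple


class Signal(NamedTuple):
    transport_unit_id: str
    latitude: float
    longitude: float
    speed: float
    timestamp: int  # UNIX timestamp, in seconds


def clean_signals(signals: Iterable[Signal]) -> Iterator[Signal]:
    """Yield only the signals that pass three simple sanity checks."""
    last_seen = {}  # newest accepted timestamp per transport unit
    for s in signals:
        # 1. Drop the (0, 0) "Null Island" coordinate.
        if s.latitude == 0 and s.longitude == 0:
            continue
        # 2. Drop coordinates outside the valid latitude/longitude range.
        if not (-90 <= s.latitude <= 90 and -180 <= s.longitude <= 180):
            continue
        # 3. Drop duplicates and late events: anything that is not strictly
        #    newer than the last accepted event of the same transport unit.
        previous = last_seen.get(s.transport_unit_id)
        if previous is not None and s.timestamp <= previous:
            continue
        last_seen[s.transport_unit_id] = s.timestamp
        yield s
```

In a streaming pipeline the same rules would be expressed as filters and deduplication steps on the table rather than as a Python loop; the sketch only pins down what "clean" means.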

After applying the necessary filters and transformations, the cleaned data can be persisted for downstream processing. Delta Lake is a suitable choice for its simplicity and flexibility. It supports a variety of storage backends - including local file systems and S3 - and does not require any background services or binaries, making it a lightweight yet efficient option.

To write the cleaned data to Delta Lake:

    pw.io.deltalake.write(
        filtered_signals,
        os.environ["PREPARED_DATA_DELTA_TABLE"],
    )

At this point, the data is cleaned and ready for use by other components in the pipeline.

Prediction Pipeline

With the prepared table now containing clean data, free of zero GPS coordinates, delayed events, duplicates, and other anomalies, a separate process can be implemented to perform predictions. This prediction process runs independently, in parallel with the initial data preparation pipeline.

To begin, the table must be opened. Pathway makes this simple for Delta Lake tables that were written by its own output connector. Delta Lake allows storing the schema as part of the table's metadata, and Pathway handles this end-to-end: when writing, it saves the schema automatically; when reading, it retrieves and applies it without requiring manual intervention.

Given this setup, the cleaned data table produced by the first process can be loaded as follows:

signals = pw.io.deltalake.read(os.environ["PREPARED_DATA_DELTA_TABLE"])

The table containing ETA requests can be read separately. Depending on the implementation, this data may be stored either in Delta Lake or in Kafka. The exact choice is typically less critical, as experience shows that GPS tracking data tends to be more problematic than the request stream.

If the requests are read from Kafka, the process starts by defining the schema, followed by reading the stream using a method such as:

    class RequestsSchema(pw.Schema):
        request_id: str
        transport_unit_id: str
        latitude: float
        longitude: float

    requests = pw.io.kafka.simple_read(
        os.environ["KAFKA_IOT_SERVER"],
        os.environ["KAFKA_REQUESTS_TOPIC"],
        schema=RequestsSchema,  # must match the class name defined above
        format="json",
    )

Once the ETA requests are read, the prediction logic can be implemented - for example, by estimating the ETA based on the current location of each transport unit. More advanced approaches are also possible; for instance, a custom reducer can accumulate requests per transport unit and predict the ETA based on the sequence of upcoming target points. This logic can be encapsulated within a method named build_predictions, which takes the table of prepared, cleaned signals and the table of ETA requests as input and returns a table containing the computed predictions.

predictions = build_predictions(signals, requests)
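
As an illustration of the simplest strategy mentioned above (an ETA based only on the current location), the per-request arithmetic inside build_predictions could look like the following plain-Python sketch. It is an assumption-laden toy, not the model used at La Poste: the function names, the straight-line (haversine) distance, the km/h speed unit, and the 40 km/h fallback for stationary units are all hypothetical choices.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2)
    # Clamp to 1.0 to guard against rounding errors near antipodal points.
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(min(1.0, a)))


def naive_eta(signal_ts, unit_lat, unit_lon, speed_kmh,
              dest_lat, dest_lon, fallback_speed_kmh=40.0):
    """Estimated arrival time as a UNIX timestamp.

    Assumes the unit drives in a straight line at its current speed;
    a fallback speed is used when the unit is (almost) stationary.
    """
    speed = speed_kmh if speed_kmh > 1.0 else fallback_speed_kmh
    distance = haversine_km(unit_lat, unit_lon, dest_lat, dest_lon)
    return signal_ts + int(round(distance / speed * 3600))
```

A straight-line estimate systematically underestimates road distance, which is exactly the kind of bias the evaluation pipeline described later is meant to expose.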

After the prediction logic is implemented, and the resulting predictions are stored in a predictions table that updates as new data arrives, these predictions can be persisted in a separate Delta Lake table for downstream evaluation. Additionally, the predictions should be published back to the requester's Kafka topic to ensure real-time arrival tracking.

This results in code similar to the following:

pw.io.kafka.write(predictions, rdkafka_settings(), os.environ["KAFKA_PREDICTIONS_TOPIC"])

pw.io.deltalake.write(predictions, os.environ["PREDICTIONS_DELTA_TABLE"])

Ground Truth Computation Pipeline

The ground truth computation pipeline is essential for evaluating the quality of predictions. Once the predicted values and the actual outcomes are available, they can be compared to assess accuracy. This is especially important for monitoring: if performance metrics deteriorate following a deployment, it becomes clear that something may have gone wrong. Without this feedback loop, the system would be operating blindly, which makes ground truth computation not merely useful but necessary.

This component can be implemented similarly to the others. A Pathway process is created that reads both the IoT signals and the ETA requests. A custom reducer can then be used to monitor each transport unit's expected arrivals. When a transport unit's location matches the destination of an order and there are no other pending deliveries, the order can be marked as completed, providing a ground-truth timestamp.

This logic must run in a separate process. While prediction is done in real time, ground truth events occur at an unknown point in the future, possibly 30 minutes or even several hours after the prediction is made. By decoupling the ground truth computation from the prediction process, the system remains responsive and scalable, without blocking or delaying predictions. As in the previous section, a table of prepared signals and a table of ETA requests are required. With these inputs, the ground truth computation logic can be implemented within a build_ground_truths method.

ground_truths = build_ground_truths(signals, requests)
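
The arrival-detection rule described above can be sketched in plain Python for a single transport unit. This is only an illustration of the idea, not the reducer used in production: `detect_arrivals`, the tuple layouts, and the 100-metre arrival radius are assumptions, and "location matches the destination" is interpreted here as "within that radius of the next pending destination".

```python
import math
from collections import deque


def distance_m(lat1, lon1, lat2, lon2):
    """Equirectangular approximation, adequate for short distances."""
    mean_lat = math.radians((lat1 + lat2) / 2)
    dx = math.radians(lon2 - lon1) * math.cos(mean_lat)
    dy = math.radians(lat2 - lat1)
    return 6371000.0 * math.hypot(dx, dy)


def detect_arrivals(signals, orders, radius_m=100.0):
    """Return {order_id: arrival_timestamp} for one transport unit.

    signals: time-ordered iterable of (timestamp, latitude, longitude)
    orders:  list of (order_id, latitude, longitude) in visiting order
    """
    pending = deque(orders)
    arrivals = {}
    for ts, lat, lon in signals:
        if not pending:
            break  # every order has been completed
        # Only the next pending order can be completed; passing close to a
        # later destination while an earlier one is still open is ignored.
        order_id, dest_lat, dest_lon = pending[0]
        if distance_m(lat, lon, dest_lat, dest_lon) <= radius_m:
            arrivals[order_id] = ts
            pending.popleft()
    return arrivals
```

Orders that never appear in the result are simply not yet completed, which is why the evaluation step has to tolerate predictions without a matching ground truth.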

Once computed, the ground truths can be written to a Delta Lake table for further evaluation:

pw.io.deltalake.write(ground_truths, os.environ["GROUND_TRUTHS_DELTA_TABLE"])

Evaluation of the Predictions Pipeline

At this stage, both the prediction and ground truth computation processes are in place. The next step is to read data from these two sources and join them using the order_id field. Once joined, each pair of records contains both the predicted and the actual arrival time. This information allows the deviation between the two to be calculated and written to a new table.
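
The join-and-subtract step can be sketched in plain Python as follows. This is a hypothetical stand-in for the logic of the evaluation pipeline, not its actual code: the `raw_errors` name, the tuple layout of a prediction, and the output field names are assumptions made for the example.

```python
def raw_errors(predictions, ground_truths):
    """Join predictions with ground truths and compute per-prediction errors.

    predictions:   iterable of (order_id, predicted_at, predicted_arrival),
                   all times being UNIX timestamps in seconds
    ground_truths: dict mapping order_id -> actual arrival timestamp
    """
    rows = []
    for order_id, predicted_at, predicted_arrival in predictions:
        actual = ground_truths.get(order_id)
        if actual is None:
            # Inner-join semantics: skip orders that are not completed yet.
            continue
        rows.append({
            "order_id": order_id,
            # How long before the actual arrival the prediction was made.
            "lead_time_s": actual - predicted_at,
            # Positive: predicted too late; negative: predicted too early.
            "error_s": predicted_arrival - actual,
        })
    return rows
```

Keeping the signed error and the lead time for every prediction, rather than a single aggregate, leaves the choice of metric open for later analysis.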

It can be assumed that the schema for prediction and ground truth entries is the same. In this case, they can be read using the Delta Lake connector as follows:

predictions = pw.io.deltalake.read(os.environ["PREDICTIONS_DELTA_TABLE"])

ground_truths = pw.io.deltalake.read(os.environ["GROUND_TRUTHS_DELTA_TABLE"])

One important consideration is that, for a single ETA request, multiple predictions may be generated over time as new data becomes available. This means a strategy is needed to aggregate these predictions when evaluating accuracy. A basic approach would be to compute the Mean Absolute Error (MAE) across all records. However, MAE alone does not account for how prediction accuracy varies with the time or distance remaining before arrival. A more descriptive evaluation might involve calculating MAE within specific buckets, such as predictions made less than 5 minutes before arrival, between 5 and 30 minutes, and beyond.
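
A bucketed MAE of this kind can be computed with a few lines of plain Python. The sketch below assumes each record is a `(lead_time_s, error_s)` pair, where the lead time is the number of seconds between the prediction and the actual arrival; the bucket labels and the `bucketed_mae` helper are hypothetical, and the boundaries simply mirror the 5-minute and 30-minute thresholds mentioned above.

```python
from collections import defaultdict

# (lower bound, upper bound, label), bounds in seconds before arrival.
BUCKETS = [
    (0, 300, "<5 min"),
    (300, 1800, "5-30 min"),
    (1800, float("inf"), ">=30 min"),
]


def bucketed_mae(rows):
    """Mean absolute error per lead-time bucket.

    rows: iterable of (lead_time_s, error_s) pairs. Records with a negative
    lead time (prediction made after the arrival) fall in no bucket and are
    ignored.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for lead_time_s, error_s in rows:
        for lower, upper, label in BUCKETS:
            if lower <= lead_time_s < upper:
                sums[label] += abs(error_s)
                counts[label] += 1
                break
    return {label: sums[label] / counts[label] for label in counts}
```

For example, `bucketed_mae([(100, 30), (200, -50), (600, 120)])` averages the first two errors into the "<5 min" bucket and reports the third one under "5-30 min".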

In practice, many different metrics could be useful depending on the context. A flexible solution was adopted in our La Poste use case: the Pathway ETL task is responsible only for joining the predictions and ground truths and calculating the raw error for each individual prediction. These results are then stored in a Postgres database, and the statistical analysis and visualization are handled separately in a BI tool. This separation of concerns provides greater flexibility in monitoring and evaluation.

The raw evaluation data can be exported in various formats. For example, it can be written to a CSV file for offline analysis using tools like Pandas or Excel. It can also be written to a Postgres database, as mentioned above:

evaluation = evaluate_predictions(predictions, ground_truths)

pw.io.csv.write(evaluation, os.environ["EVALUATION_RESULTS_TABLE"])

pw.io.postgres.write(evaluation, get_postgres_settings(), os.environ["EVALUATION_POSTGRES_TABLE"])

Alternatively, a custom output connector can stream the data directly into Grafana or another monitoring tool for real-time visualization.

The core pipeline is complete. In the next section, the advantages and trade-offs of this modular architecture will be discussed, including when such decomposition is most beneficial and how to address scaling challenges effectively.

Observations

Whether to decompose a system into multiple pipelines or keep it as a single unit is an old question. It closely resembles the debate between monolithic and microservices architectures. In this section, we will discuss the pros and cons of splitting the pipeline and insights from our experience implementing this approach for La Poste's ETA prediction task, where such decomposition proved effective.

Microservices Benefits

By implementing this decomposition into different microservices, several clear advantages were observed:

- Improved scalability. Pathway supports configurable parallelism, allowing each pipeline to scale independently. This means that only the components under load, such as the prediction pipeline, need additional resources without affecting others, like alerting or evaluation.

- Access to intermediate data. With clearly separated stages, inspecting and analyzing intermediate outputs becomes easier. This is particularly helpful for tracing the source of bad data and debugging complex behaviors.

- Enhanced fault tolerance. Isolating each pipeline ensures that failures in one component, such as a runtime exception in the evaluation pipeline, do not cascade or disrupt other processes, like predictions. This separation leads to a more robust and resilient system overall.

New Challenges

While the benefits of using microservices are significant, several challenges do arise and need to be addressed:

- Increased maintenance overhead. Changing the schema of an intermediate table produced by one pipeline often requires synchronized updates in downstream pipelines, and sometimes even adjustments to historical data.

- More involved deployment process. Instead of deploying a single monolithic pipeline, multiple components (in our case, four) need to be deployed, monitored, and coordinated. This adds operational complexity during updates or rollbacks.

- Higher storage requirements. Storing intermediate results, such as the cleaned data, incurs additional storage costs. This trade-off needs to be considered, especially for high-volume streams.

Scaling

As you may have noticed, the architecture described above uses Delta Lake as the storage layer for intermediate data. Delta Lake is a very convenient solution—it doesn't require deploying additional services and can operate directly over backends like S3. However, there are a few nuances worth keeping in mind.

One key consideration is how data is ingested and stored. In our case, data is read from Kafka with low latency and written to Delta Lake in batches. These writes happen fairly often; each commit generates a new Parquet file and an update to the transaction log. Over time, this results in many small files and metadata entries, which can eventually lead to performance degradation.

This is manageable, but it requires proper handling. The main technique to manage this growth is partitioning - note that this differs from Kafka partitioning. In Delta Lake, partitioning involves designating one or more columns whose values determine the directory structure in which files are stored. For example, you can derive a "day" column from the timestamp field and use it as a partition key via the partition_columns parameter in pw.io.deltalake.write.

Then, if the partitioning is based on a daily scale and the partitioning column is named timestamp_day, the output configuration would look as follows:

    pw.io.deltalake.write(
        table,
        "/path/to/lake",
        partition_columns=[table.timestamp_day],  # enable partitioning by `timestamp_day`
    )
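
The value of such a day column can be derived from the UNIX timestamp with the standard library alone. The helper below is a hypothetical sketch: the `timestamp_day` function name matches the column name used above, while the choice of UTC and of the `YYYY-MM-DD` string format are assumptions. How it is attached to the table (for instance through a user-defined function) depends on the pipeline.

```python
from datetime import datetime, timezone


def timestamp_day(unix_ts: int) -> str:
    """Map a UNIX timestamp (seconds) to its UTC calendar day, e.g. '2024-01-01'."""
    return datetime.fromtimestamp(unix_ts, tz=timezone.utc).strftime("%Y-%m-%d")
```

Using UTC keeps the partition boundaries stable regardless of where the pipeline runs; a local time zone would shift events near midnight into a different partition.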

Partitioning enables several optimizations: files within a partition can be compacted over time, reducing fragmentation and improving query performance. Similarly, the Delta transaction log can be periodically cleaned up - after data is compacted and old versions become obsolete, they can be safely removed.

These maintenance strategies allow the system to remain efficient and manageable, even when operating continuously over long timeframes - months or even years.

Going further

The microservice-style architecture not only enabled the construction of the primary pipeline but also unlocked several opportunities to reuse computation results and build additional production services with minimal effort:

- Anomaly detection and alerting. If the predictions deviate significantly from the ground truth, this may indicate an issue, such as a buggy deployment or unexpected data shift. A separate Pathway pipeline can monitor for anomalies and trigger alerts via a Slack connector or other notification channel.

- Prediction model improvement. Combining cleaned input data and ground truth values forms a high-quality dataset ideal for training improved prediction models. This data can be collected over time and used offline to refine and validate new approaches.

- A/B testing. When experimenting with a new prediction strategy, running it in parallel with the existing one is easy. For example, transport units can be sharded by their IDs, assigning a fixed percentage to the stable model and the rest to the experimental one. Since the architecture supports running multiple pipelines simultaneously, this testing becomes straightforward.
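
The ID-based sharding mentioned in the A/B testing item can be sketched as a small deterministic function. This is an illustrative assumption rather than the scheme used at La Poste: the `assign_model` name, the SHA-256 hash, and the 90/10 default split are hypothetical choices.

```python
import hashlib


def assign_model(transport_unit_id: str, stable_percent: int = 90) -> str:
    """Deterministically assign a transport unit to a model variant.

    A cryptographic hash is used instead of Python's built-in hash() so the
    assignment stays the same across processes and restarts.
    """
    digest = hashlib.sha256(transport_unit_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # value in 0..99
    return "stable" if bucket < stable_percent else "experimental"
```

Because the assignment depends only on the ID, both prediction pipelines can apply the same function independently and still agree on which units each of them serves.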

Conclusions

Of course, microservice architecture is not a silver bullet. It should be adopted thoughtfully, carefully considering whether it's appropriate for the specific task at hand. When used wisely, it can be a powerful tool for simplifying complex data workflows and increasing system reliability.

This article uses a real-world example from our work with La Poste to show how a Pathway pipeline can be effectively split into multiple microservices. You now know how to synchronize pipelines using Delta Lake for Pathway-to-Pathway communications, understand the trade-offs involved, and manage potential challenges such as intermediate data growth. With this foundation, you're better equipped to design scalable and maintainable streaming architectures with Pathway.

If you need any help with pipelining, feel free to message us on Discord or submit a feature request on GitHub Issues.