Kafka vs RabbitMQ for Data Streaming

Anup Surendran

Anup SurendranKafka vs RabbitMQ for Data Streaming

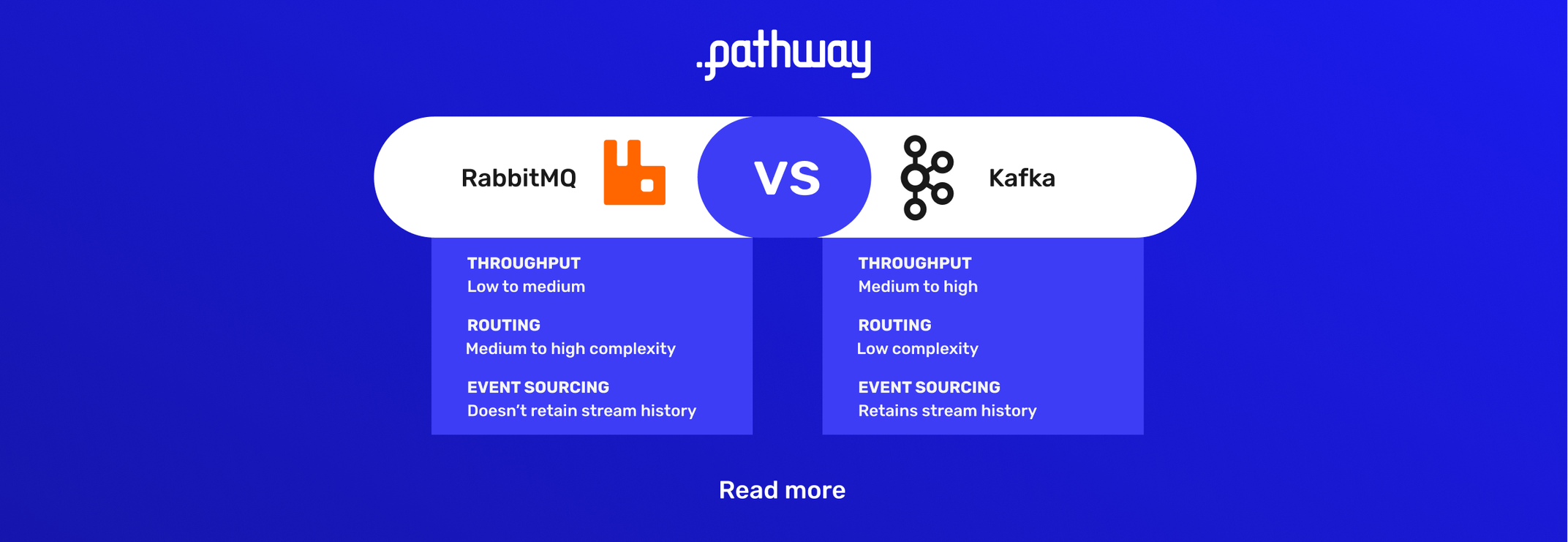

This article will explain the difference between RabbitMQ and Kafka. The article will walk through the strengths and weaknesses of each platform so that you can choose the right solution for your data streaming use case.

Before explaining the difference between RabbitMQ and Kafka and when to use each technology, it is important to understand the difference between a message broker and a distributed streaming system.

Message Broker vs Distributed Streaming System

What is a Message Broker?

A message broker helps exchange messages across systems and applications. A message broker is usually used to handle background jobs, long running tasks, or act as a translator between applications (including microservices). An example of a long running task is that you have to create a large PDF file programmatically by reading and formatting a lot of data from your data source. The reading and transformation of that data and writing into a file could take quite some time. A message broker in this case could help with a "fire and forget" scenario, where the requester does not have to wait for the creation of the file and that job can happen in the background.

A message broker helps broker messages by supporting different protocols. Some of the most popular protocols they support are Advanced Message Queuing protocol (AMQP), protocol for Machine to Machine (M2M) and Internet of Things (IoT) messaging (MQTT), Simple Text Oriented Messaging Protocol (STOMP).

RabbitMQ is a general-purpose message broker.

What is a distributed streaming system?

A distributed streaming system can process multiple data streams simultaneously. This allows for creating high throughput data ingestion and data processing pipelines. Streaming systems are used for large amounts of data, processing data in real-time or to analyze data over a time-period. The distributed architecture ensures a fault-tolerant publish-subscribe messaging system.

Kafka is a distributed streaming system.

When should I use RabbitMQ vs Kafka?

| Use cases | RabbitMQ | Kafka |

|---|---|---|

| Throughput | Low to Medium For high volume processes, the resource utilization is very high (you would need a minimum of 30 nodes). | Medium to High (throughput of 100K/sec events and more) Kafka uses sequential disk I/O to boost performance. It can achieve high throughput (millions of messages per second) with limited resources, a necessity for big data use cases. |

| Routing | Medium to high complexity routing rules | Low complexity routing rules |

| History | Does not keep messaging history | Meant for applications requiring stream history. Provides the ability to "replay" streams. |

| Event Sourcing | Not explicitly built with event logs. | Event logs are supported. Temporal queries are supported: You can determine the application state at any point in time. |

| Multi-stage processing | Usually for single stage processing | Data processing can be done in multi-stage pipelines. The pipelines generate graphs of real-time data flows. |

| Latency | For low throughput RabbitMQ performs better E.g. For a reduced 30 MB/s load optimally configured RabbitMQ gives you a 1 ms latency | For high throughput Kafka latency is better. E.g. 5 ms (200 MB/s load) |

Once you understand the type of use cases which each of these systems support, it is worthwhile to understand what the messaging architecture looks like. The following table will give you an understanding of the design elements - messaging, data processing, topology and redundancy of these two systems and can further guide you with the right technology selection.

| Design element | RabbitMQ | Kafka |

|---|---|---|

| Message Retention | Acknowledgment based To be unqueued, the messages are returned to the queue on negative ACK and saved to the consumer on positive ACK. | Policy-based (e.g., 30 days, 60 days etc) While Kafka uses a retention time, any messages that were retained based on that period are erased once the period has passed. |

| Message Priority | Messages can be given priority with the help of a priority queue. | All messages have the same priority, which cannot be altered. |

| Message delivery guarantee | Doesn’t guarantee atomicity, even in relation to transactions involving a single queue. | Retains order only inside a partition. In a partition, Kafka guarantees that the whole batch of messages either fails or passes. |

| Data Processing | Transactional | Operational |

| Consumer type | Smart broker/dumb consumer.The broker consistently delivers messages to consumers and keeps track of their status | Dumb broker/smart consumer. Kafka doesn’t monitor the messages each user has read. Rather, it retains unread messages only, preserving all messages for a set amount of time. Consumers must monitor their position in each log. |

| Topology | Exchange type: Direct, Fan out, Topic, Header-based | Publish/subscribe based |

| Payload Size | No constraints | Default 1MB limit. Designed ideally for smaller messages |

| Data Flow | Uses a distinct, bounded data flow. Messages are created and sent by the producer and received by the consumer. | Uses an unbounded data flow. The payload usually consists of key-value pairs that continuously stream to the assigned topic. |

| Redundancy | Use a round-robin queue to repeat messages. To boost throughput and balance the load, the messages are divided among the queues. Additionally, it enables numerous consumers to read messages from various queues at once. | Uses partitions. The partitions are duplicated across numerous brokers. In the event that one of the brokers fails, the customer can still be served by another broker. |

| Messaging protocols supported | STOMP, MQTT, AMQP, 0-9-1 | Binary over TCP |

| Authentication | RabbitMQ supports Standard Authentication and Oauth2. | Kafka supports Oauth2, Standard Authentication, and Kerberos. |

| Languages supported | Supports Python, Ruby, Elixir, PHP, Swift, Go, Java, C, Spring, .Net, and JavaScript. | Supports Node js, Python, Ruby, and Java. |

Summary

In summary, Kafka is useful for high-throughput, cost-efficient streaming use cases. RabbitMQ is useful for low to medium throughput messaging with complex routing and a variety of consumers.

For the diehard RabbitMQ developers, RabbitMQ has a new data structure modeling an append-only log, with non-destructive consuming semantics. This new data structure (https://www.rabbitmq.com/streams.html) will be an interesting addition for RabbitMQ users looking to enhance their streaming use case. This is only available in RabbitMQ3.9 and above.

References

https://dl.acm.org/doi/10.1145/3093742.3093908

For message or stream processing analytics please check out this simple example on performing simple machine learning on Kafka.