Build LLM/RAG pipelines with YAML templates by Pathway

Saksham Goel

Exciting news! Pathway now lets you build Large Language Model (LLM) apps using YAML configuration files. You can create production-ready RAG pipelines tailored to your needs without writing Python code. Think of a YAML template as a parameterized pipeline definition: pipelines inherit its parameters, which makes it easier to set up new projects or update several configurations at once.

Simplify Your LLM Pipeline Configuration

Pathway lets you put AI applications into production that offer high-accuracy RAG at scale, using the most up-to-date knowledge available in your data sources. Traditionally, configuring LLM pipelines means editing Python scripts, which can be time-consuming and requires programming expertise on your team. With the new YAML configuration approach, you can:

- Easily adjust settings: Change parameters and settings directly in a human-readable YAML file.

- Swap components effortlessly: Replace or modify components like data sources, LLMs, and indexers without code changes.

- Keep configurations organized: Use variables and tags in YAML to maintain clean and manageable configuration files.

Learn More

To get started and see examples of how to customize your LLM templates with YAML, visit our detailed guide:

Customizing LLM Templates with YAML Configuration Files

Customize Your Favorite Pipeline with YAML Templates

Pick the application template that suits you best. You can use it out of the box, or change individual steps of the pipeline in its YAML configuration file. For example, adding a new data source or switching from a Vector Index to a Hybrid Index is just a one-line change.

| Application (llm-app pipeline) | Description |
|---|---|
| Question-Answering RAG App | Basic end-to-end RAG app. A question-answering pipeline that uses the GPT model of your choice to answer queries about your documents (PDF, DOCX, ...) on a live connected data source (files, Google Drive, SharePoint, ...). |
| Live Document Indexing (Vector Store / Retriever) | A real-time document indexing pipeline for RAG that acts as a vector store service. It performs live indexing on your documents (PDF, DOCX, ...) from a connected data source. It can be used with any frontend, or integrated as a retriever backend for a LangChain or LlamaIndex application. |
| Multimodal RAG pipeline | Multimodal RAG using an MLLM in the parsing stage to index PDFs. It is well suited to extracting information from unstructured financial documents in your folders (including charts and tables), updating results as documents change or new ones arrive. |
| Slides AI Search | Multimodal search service that leverages GPT-4o for PowerPoint and PDF presentations. Content is parsed and enriched with metadata through a slide parsing module powered by GPT-4o, with parsed text and metadata stored in an in-memory indexing system that supports both vector and hybrid indexing. |
| Adaptive RAG App | A RAG application using Adaptive RAG, a technique developed by Pathway to reduce token cost in RAG by up to 4x while maintaining accuracy. |
| Private RAG App with Mistral and Ollama | A fully private (local) version of the question_answering_rag RAG pipeline using Pathway, Mistral, and Ollama. |

How It Works

Pathway uses a custom YAML parser to map your configurations to Python objects and functions. This means you can:

- Initialize Objects: Instantiate classes directly within the YAML file by using map tags.

- Reference Enums: Set values of enum arguments using YAML tags.

- Define Schemas: Create data schemas inline using helper functions.

- Use Variables: Declare variables for reuse throughout your configuration to keep it concise and maintainable.
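
To make the idea of mapping tags to objects more concrete, here is a minimal, standard-library-only sketch. It is not Pathway's parser and does not read YAML: it assumes the configuration has already been parsed into a plain dict, and it uses hypothetical conventions chosen for illustration (a `tag` key holding a `!`-prefixed dotted path, `$name` keys and strings for variables, and the helper names `load_dotted`, `resolve`, and `build`). Standard-library classes stand in for real pipeline components.

```python
import importlib
from typing import Any


def load_dotted(path: str) -> Any:
    """Import the longest importable module prefix of `path`, then follow attributes."""
    parts = path.split(".")
    for i in range(len(parts), 0, -1):
        try:
            obj = importlib.import_module(".".join(parts[:i]))
        except ImportError:
            continue  # prefix is not a module; try a shorter one
        for attr in parts[i:]:
            obj = getattr(obj, attr)
        return obj
    raise ImportError(f"cannot resolve {path!r}")


def resolve(node: Any, variables: dict[str, Any]) -> Any:
    """Recursively turn tagged nodes into Python objects (illustrative rules only)."""
    if isinstance(node, str):
        if node.startswith("$"):
            return variables[node[1:]]      # reuse a previously declared variable
        if node.startswith("!"):
            return load_dotted(node[1:])    # bare tag, e.g. an enum member
        return node
    if isinstance(node, list):
        return [resolve(item, variables) for item in node]
    if isinstance(node, dict):
        args = {k: resolve(v, variables) for k, v in node.items() if k != "tag"}
        if "tag" in node:
            # Tagged mapping: call the referenced class with the other keys as kwargs.
            return load_dotted(node["tag"][1:])(**args)
        return args
    return node


def build(config: dict[str, Any]) -> dict[str, Any]:
    """Resolve top-level entries in order; `$`-prefixed keys become variables."""
    variables: dict[str, Any] = {}
    result: dict[str, Any] = {}
    for key, value in config.items():
        resolved = resolve(value, variables)
        if key.startswith("$"):
            variables[key[1:]] = resolved
        else:
            result[key] = resolved
    return result


# Stand-in configuration: SimpleNamespace plays the role of a pipeline component.
config = {
    "$embedder": {"tag": "!types.SimpleNamespace", "model": "example-embedder"},
    "$timeout": {"tag": "!datetime.timedelta", "seconds": 30},
    "pipeline": {
        "tag": "!types.SimpleNamespace",
        "embedder": "$embedder",
        "timeout": "$timeout",
        "status": "!http.HTTPStatus.OK",
    },
}

app = build(config)
print(app["pipeline"].embedder.model)
```

In this sketch a variable must be declared before it is referenced, and only the untagged top-level keys are returned to the caller. A real parser may behave differently on both points, so treat the rules above as a way to picture the mechanism and refer to the guide for the actual syntax.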

Are you looking to build an enterprise-grade RAG app?

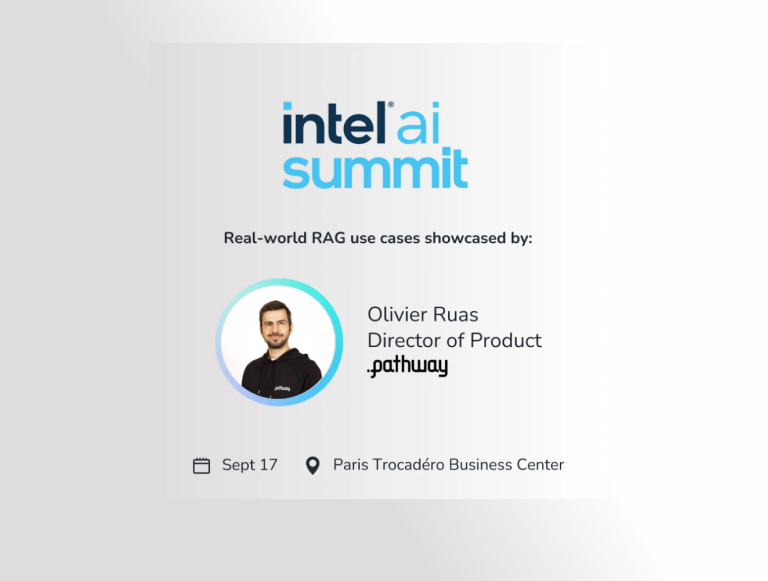

Pathway is trusted by industry leaders such as NATO and Intel, and is natively available on both AWS and Azure Marketplaces. If you’d like to explore how Pathway can support your RAG and Generative AI initiatives, we invite you to schedule a discovery session with our team.

Schedule a 15-minute demo with one of our experts to see how Pathway can be the right solution for your enterprise needs.

Conclusion

Whether you're a seasoned developer or just starting out, Pathway's customizable LLM templates with YAML configuration enable you to build, customize, and deploy powerful LLM pipelines with ease. We can't wait to see what you'll create!

Join the community: share your experiences on our community forum, or join Pathway's Discord server (#get-help) and let us know how the Pathway community can help you.

For any questions or feedback, don't hesitate to reach out to us at contact@pathway.com!