Implementation of RAG for Production Use Cases

In this module, you will explore how RAG can be implemented for production use cases. The content here is designed to help you understand the real-world applications of RAG, going beyond the basics, and preparing you for challenges in production environments.

To dive deeper, check out this video by Saksham.

Key Topics Covered in the Video:

- LLM + RAG Architecture: You’ll learn how enterprises can use public LLMs combined with RAG to improve response accuracy by integrating private, internal data. This section also covers the challenges of working with corporate-specific data that public LLMs cannot access.

- Challenges in Production Deployments: This section discusses the common issues developers face when scaling LLM + RAG solutions, including: -- Managing real-time data ingestion from multiple sources. -- Ensuring compliance with data governance policies. -- Overcoming issues related to the quality and accuracy of LLM outputs, such as inconsistencies and hallucinations.

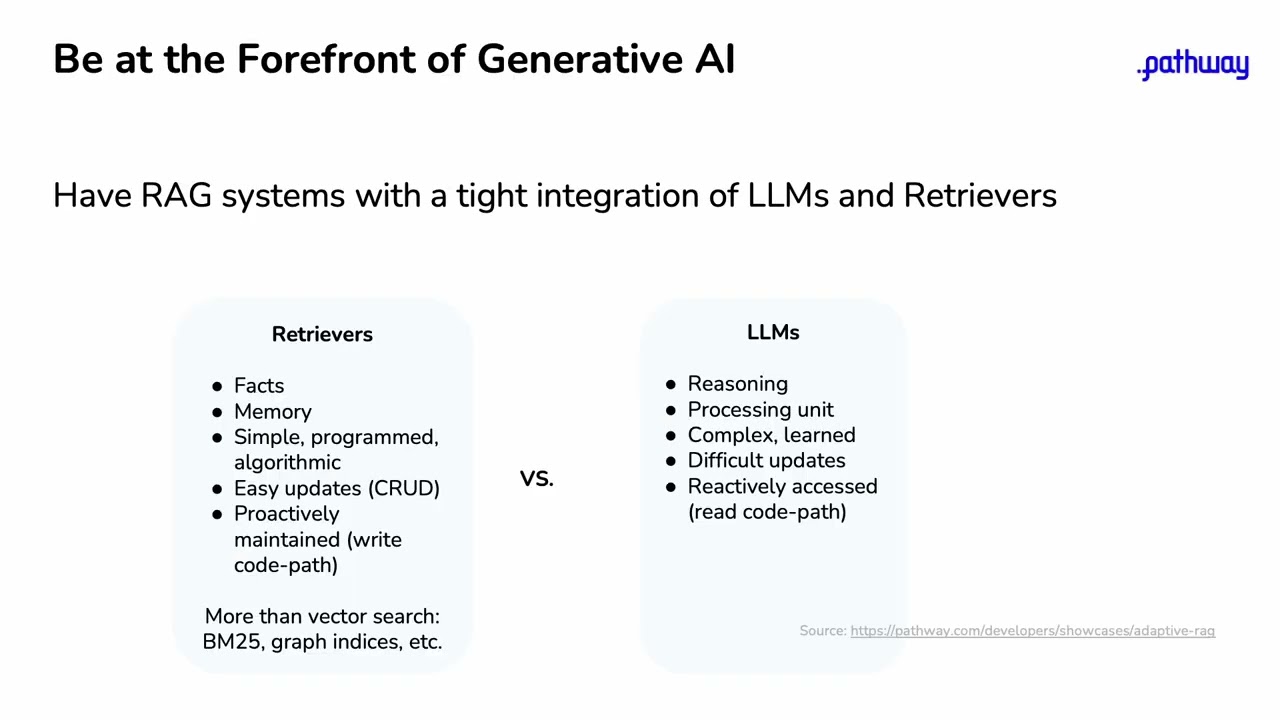

- Optimizing RAG Systems for Enterprises: Learn how retrievers and LLMs need to be tightly integrated and optimized together for the best performance. This part of the video emphasizes the importance of cost-efficient adaptive integration, where the system calls on retrievers only when needed to minimize resource consumption.

- Building Live Integrated LLM + RAG Systems: The video demonstrates how to build an end-to-end RAG architecture that handles real-time data from sources such as Salesforce, SharePoint, PostgreSQL, and other APIs. You'll see how acquiring and using knowledge can be streamlined in real-time AI systems.

- The Unified Approach to RAG: Instead of relying on disconnected components, the video advocates for a unified architecture where all essential parts—data ingestion, embedding models, ranking, and inference—are integrated into a single system. This approach simplifies scaling and deployment, ensuring better accuracy and easier maintenance.